ORION MUSIC FESTIVAL 2022 PRODUCTION TECH

aka how we tried to make it better.


TEAM

The production crew at Orion Music Festival had an active role in managing the stage, controlling the lights, directing the cameras, filming the event, providing content, and handling the low-latency streaming chain from the DJs all the way through to the Twitch broadcast.

This year we grew the team considerably (in fact, we doubled it), brought on fantastic new talent with lighting and festival broadcasting experience, and were humbled by the professionalism of our volunteers.

OMF 2021 WAS A GREAT TEACHER

What did we learn?

• How to push and optimize our worlds within the constraints of VRSL to create a performant and polished experience.
• How to improve our stream quality dramatically, and how to set up both the world and our software to minimize frame drops and improve frame rates, both in-game and in our encoders.
• The importance of taking breaks, and how extra hands, when used effectively, can greatly expand our capabilities.
• To give ourselves much more time for our objectives throughout the year than we think we need, and to lock down changes well before the event so that we can properly QA everything.

VR STAGE LIGHTING

VR Stage Lighting (VRSL) is the backbone of the Orion Music Festival stage production. VRSL is a collection of shaders, scripts, and assets that brings DMX512, the professional-grade stage production communication protocol, directly into VRChat. This lets us control lights, effects, and shaders in VRChat through our stream, using professional lighting hardware and software. Because the lighting data is delivered through the stream, the show is more dynamic and immersive, plays out identically across all instances, and eliminates any chance of desynchronization during delivery.
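
DMX512's addressing model is simple: a universe carries 512 channels, each an 8-bit value from 0 to 255, and a fixture patched at a start address reads a run of consecutive channels. The Python below is a minimal sketch of that model only; the fixture name and its four-channel layout are hypothetical, and this is not how VRSL itself decodes DMX inside VRChat.

```python
from dataclasses import dataclass

UNIVERSE_SIZE = 512  # one DMX512 universe: 512 channels of 8-bit values


@dataclass
class Fixture:
    name: str
    start_address: int          # 1-based DMX address, as entered on a lighting desk
    channels: tuple[str, ...]   # hypothetical channel layout ("personality")

    def read(self, universe: list[int]) -> dict[str, float]:
        """Return this fixture's channel values normalised to 0.0-1.0."""
        start = self.start_address - 1  # convert the 1-based address to a list index
        end = start + len(self.channels)
        if start < 0 or end > UNIVERSE_SIZE:
            raise ValueError(f"{self.name} is patched outside the universe")
        return {ch: universe[start + i] / 255 for i, ch in enumerate(self.channels)}


universe = [0] * UNIVERSE_SIZE
universe[0:4] = [255, 255, 0, 128]  # dimmer full, red full, green off, blue about half

wash = Fixture("wash_1", start_address=1, channels=("dimmer", "red", "green", "blue"))
print(wash.read(universe))
```

Patching a second fixture is just a matter of giving it a start address whose channel run does not overlap the first.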

For OMF 2022, we took advantage of VRSL's expansions over the past year, introducing new lighting fixture types and bringing back much-improved legacy ones. This allowed us to relax our world design performance targets and push for a much more elaborate and immersive world design.

We were also able to expand our lighting team to two lighting desk engineers, who brought beautiful shows to each DJ. This also ensured consistent quality across the entire show, as the lighting desk could now work in shifts to maintain their stamina. Running a lighting show 100% live takes a lot of energy, time, and focus, and, much like real stage lighting, it is a proper career path that should not be taken lightly.

The first Orion Music Festival was created to show off the potential of the VRSL project, and OMF has since taken on a life of its own. Even so, OMF still strives to be the leading event in the use of VRSL: pushing its limits, showcasing professional standards to the masses, and inspiring VRChat users to use VRSL in fun and creative ways we have not yet seen.

DELIVERING THE WORLD STREAM

We wanted to raise the bar for the quality of each DJ's stream this year, ensuring a high level of visual and audio fidelity. We worked closely with each artist to ensure they could deliver 1080p30 H.264-encoded video, along with best-in-class AAC audio from Apple's CoreAudio encoder.

This year we provided each DJ with a transparent mask image, which allowed them to hide any parts of their visuals that fell outside the UV space of the video screens and so would never be seen in-game.

This was a huge deal: it meant that only roughly half of the 1080p canvas contained changing pixels for the encoder, so the DJs were able to greatly reduce pixelation and artifacts while operating within the limited bitrate available to them.
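
A toy model shows why the mask helps. Assuming, as a simplification, that the encoder mostly spends bits on pixels that change between frames, forcing everything outside the visible UV area to a constant value takes those pixels out of the budget. The function names and the tiny 2x4 frames below are hypothetical; real encoders also use motion compensation and other tricks this sketch ignores.

```python
Frame = list[list[int]]  # greyscale pixel values for a tiny, hypothetical frame


def apply_mask(frame: Frame, mask: list[list[bool]]) -> Frame:
    """Keep pixels where the mask is True; replace the rest with constant black."""
    return [
        [px if keep else 0 for px, keep in zip(row, mask_row)]
        for row, mask_row in zip(frame, mask)
    ]


def changed_fraction(prev: Frame, curr: Frame) -> float:
    """Fraction of pixels whose value differs between two consecutive frames."""
    total = sum(len(row) for row in curr)
    changed = sum(a != b for r0, r1 in zip(prev, curr) for a, b in zip(r0, r1))
    return changed / total


# Every pixel changes between these frames; the mask keeps only the left half.
prev = [[10, 20, 30, 40], [50, 60, 70, 80]]
curr = [[11, 21, 31, 41], [51, 61, 71, 81]]
mask = [[True, True, False, False], [True, True, False, False]]

print(changed_fraction(prev, curr))                                      # 1.0
print(changed_fraction(apply_mask(prev, mask), apply_mask(curr, mask)))  # 0.5
```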

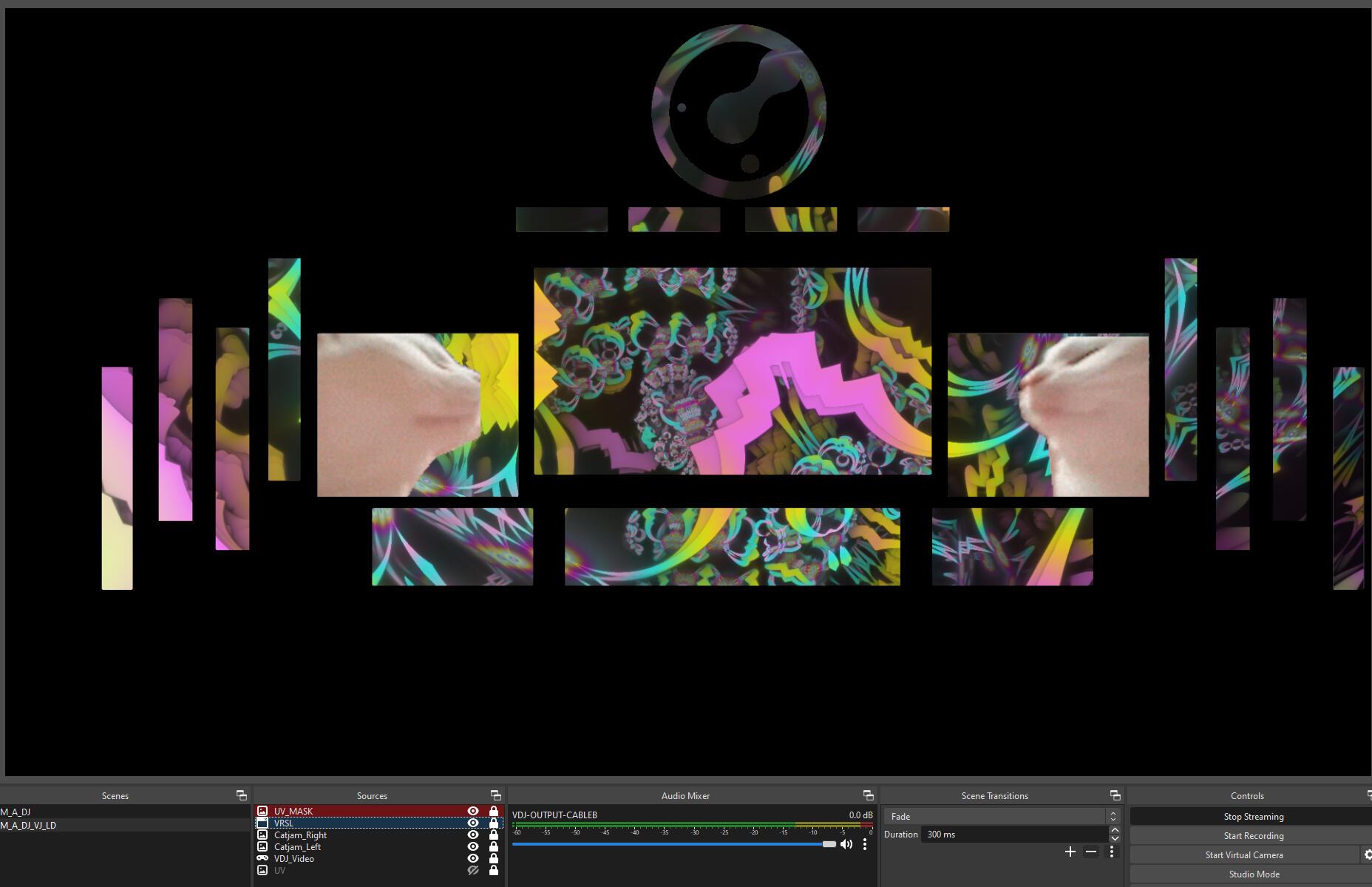

Our Lighting Designers (LDs) then pulled the RTMP streams directly onto their PCs and performed their live (busking) lighting shows on top of the DJs' music. A dedicated OBS compositor PC (as shown below) would then pick up the current LD's stream and relay it to a specific stream key that was hard-coded in the OMF event world.
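
The routing is a many-inputs, one-output switch: whichever LD is on shift feeds the compositor, and the world only ever sees one fixed stream key. The source does not say how the switch was triggered on the compositor, so this sketch simply assumes a fixed shift rota; the rota, the input names, and the key are all hypothetical.

```python
from bisect import bisect_right

# Hypothetical shift rota: (minutes since the show started, LD input), sorted by start.
SHIFTS = [(0, "ld_a"), (120, "ld_b"), (240, "ld_a")]
# Placeholder for the single stream key hard-coded in the world.
WORLD_STREAM_KEY = "hypothetical-world-key"


def active_input(minute: float) -> str:
    """Return which LD's stream the compositor should relay at a given minute."""
    starts = [start for start, _ in SHIFTS]
    idx = bisect_right(starts, minute) - 1  # last shift that started at or before `minute`
    if idx < 0:
        raise ValueError("the show has not started yet")
    return SHIFTS[idx][1]


def route(minute: float) -> tuple[str, str]:
    """Many LD inputs in, always the same output key out."""
    return active_input(minute), WORLD_STREAM_KEY


print(route(30))
print(route(150))
```

Keeping the output key fixed means an LD hand-over never requires any change inside the world itself.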

To achieve all of this, we relied on VRCDN for low-latency delivery and configured a constant bitrate (CBR) of 4500 Kbps across the streams. Unfortunately, the timing of the festival also meant we were subject to extreme network conditions as a hurricane hit the East Coast, which did negatively impact the performances.

At the end of every set, we also resynced the stream for all users in the instance with the camera stream user. This was to reduce desynchronisation between the in-world video player and the Twitch stream caused by micro-stutters, which continues to be a problem for long events.
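
The 4500 Kbps figure and the mask combine in a rough budget calculation. At 30 frames per second each frame gets 150,000 bits; spread over a 1920x1080 canvas that is roughly 0.072 bits per pixel, and roughly double that if only half the canvas changes, as with the masked DJ streams. The helper below is a back-of-the-envelope sketch, not a model of how H.264 actually allocates bits.

```python
def bits_per_pixel(bitrate_kbps: float, width: int, height: int, fps: float,
                   active_fraction: float = 1.0) -> float:
    """Average bits available per changing pixel per frame.

    active_fraction approximates the share of the canvas that actually changes;
    real encoders distribute bits unevenly, so treat this as a rough guide only.
    """
    bits_per_frame = bitrate_kbps * 1000 / fps
    return bits_per_frame / (width * height * active_fraction)


full = bits_per_pixel(4500, 1920, 1080, 30)
masked = bits_per_pixel(4500, 1920, 1080, 30, active_fraction=0.5)
print(f"full canvas: {full:.3f} bpp, masked: {masked:.3f} bpp")
# full canvas: 0.072 bpp, masked: 0.145 bpp
```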

VIDEO MIXER

At the last OMF, we set ourselves the challenge of implementing a camera control system in a full world of 80 people. We knew this would be taxing on our computers, and ultimately we were only able to show a preview and a programme camera.

While creating OMF 2022, we wanted a far more robust and versatile camera control system than last year's: one that gave us complete visibility of several cameras, similar to a Blackmagic vision mixer, while avoiding the performance penalty of rendering the world several times simultaneously in a full instance.

Orels kindly provided much-needed assistance, not only by taking our extremely rigid specifications for a multi-camera system and turning them into a working prototype, but also by finding a way to reduce the performance impact of having all cameras active in the world.

This was achieved through specific control over each camera's frame rate, with the ability to force the cameras to take turns rendering their viewpoints. The camera frame rates would also drop automatically based on the Video Director's own in-game FPS.
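
Taking turns can be pictured as a round-robin scheduler with a per-frame render budget that shrinks as the director's FPS falls. This is a minimal sketch of that idea, not Orels' implementation: the FPS thresholds and budgets are hypothetical, and it treats all cameras alike for simplicity.

```python
from dataclasses import dataclass


@dataclass
class CameraScheduler:
    """Round-robin sketch: render only a few cameras per game frame."""
    camera_count: int
    next_index: int = 0

    def __post_init__(self) -> None:
        if self.camera_count < 1:
            raise ValueError("need at least one camera")

    def budget(self, director_fps: float) -> int:
        # Hypothetical thresholds: fewer camera renders per frame as FPS drops.
        if director_fps >= 60:
            return 3
        if director_fps >= 40:
            return 2
        return 1

    def cameras_for_frame(self, director_fps: float) -> list[int]:
        """Pick the next cameras in rotation and advance the rotation."""
        n = min(self.budget(director_fps), self.camera_count)
        chosen = [(self.next_index + i) % self.camera_count for i in range(n)]
        self.next_index = (self.next_index + n) % self.camera_count
        return chosen


sched = CameraScheduler(camera_count=8)  # eight cameras, as at OMF 2022
for fps in (72, 72, 30):
    print(fps, sched.cameras_for_frame(fps))
```

With three renders per frame at 72 FPS, each of eight cameras refreshes 72 * 3 / 8 = 27 times per second, instead of every camera rendering every frame.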

This was further enhanced with AcChosen's MIDI mapping system, which let us bind MIDI keys and save and recall those bindings for each Video Director's own hardware.

Furthermore, we were able to map faders, encoders, and pads to control the zoom, pan, speed, and starting positions of multiple cameras, giving us as Video Directors complete control to set up each shot before the next cut was made.
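
Per-controller profiles boil down to a binding table that can be saved and reloaded, plus a scaling step that turns a 7-bit MIDI control-change value (0 to 127) into a camera parameter. This sketch is not AcChosen's in-world system; the binding keys, action names, file name, and the 20 to 60 degree zoom range are all hypothetical.

```python
import json
import tempfile
from pathlib import Path


def cc_to_range(value: int, low: float, high: float) -> float:
    """Scale a 7-bit MIDI CC value (0-127) linearly into [low, high]."""
    if not 0 <= value <= 127:
        raise ValueError("MIDI CC values are 7-bit (0-127)")
    return low + (high - low) * value / 127


def save_profile(path: Path, bindings: dict[str, str]) -> None:
    """Store one director's controller bindings as JSON."""
    path.write_text(json.dumps(bindings, indent=2))


def load_profile(path: Path) -> dict[str, str]:
    """Recall previously saved bindings."""
    return json.loads(path.read_text())


# Hypothetical bindings: pads send note numbers, faders/encoders send CC numbers.
bindings = {
    "note:36": "cut:cam1",       # pad -> cut to camera 1
    "cc:1": "zoom:cam3",         # fader -> camera 3 zoom
    "cc:2": "speed:drone",       # encoder -> drone loop speed
}

with tempfile.TemporaryDirectory() as tmp:
    profile = Path(tmp) / "director_a_controller.json"
    save_profile(profile, bindings)
    assert load_profile(profile) == bindings  # round-trips unchanged

print(cc_to_range(64, 20.0, 60.0))  # fader near the middle -> zoom near 40 degrees
```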

By also providing a mechanism to send the output (or programme) of the cameras to a single user in the world, we then had ourselves a working broadcast solution.

CAMERAS

We ran 8 cameras in-world: a combination of manually triggered static shots and a drone camera on an animated loop around the dance floor and stage.

One of these was the hand-held camera, tirelessly operated by Kanami and Leafynn. The hand-held camera is unique in that it has a couple of features that assist the VR camerawork and festivalgoers' interaction with it:

1) Tally Lights - both the viewfinder and the front of the camera automatically show a red indicator when the Video Mixer cuts live to it. The camera operator also has a green indicator that shows when the camera is in preview on the Video Mixer.

2) Horizon Leveling - to keep the broadcast from being too shaky or wobbly, this mechanism constantly tries to keep the horizon of the synced camera object level, without preventing the camera operator from achieving the angles needed for their shots.
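
Both features can be sketched as small pieces of logic. The tally is a priority check (live beats preview), and horizon leveling can be modelled as easing only the roll angle back toward zero while leaving pitch and yaw to the operator. The leveling approach, the `strength` parameter, and the camera names are assumptions for illustration; the source does not describe the actual implementation.

```python
import math
from enum import Enum


class Tally(Enum):
    OFF = "off"
    PREVIEW = "green"     # operator-facing indicator only
    PROGRAMME = "red"     # viewfinder and front of the camera


def tally_for(camera: str, programme: str, preview: str) -> Tally:
    """Programme (live) takes priority if a camera is also selected in preview."""
    if camera == programme:
        return Tally.PROGRAMME
    if camera == preview:
        return Tally.PREVIEW
    return Tally.OFF


def level_roll(roll_deg: float, dt: float, strength: float = 4.0) -> float:
    """Ease roll toward 0 degrees (a level horizon); pitch and yaw are untouched.

    Assumes roll is expressed in (-180, 180]. Uses exponential decay so the
    correction speed does not depend on the frame rate.
    """
    return roll_deg * math.exp(-strength * dt)


# Hypothetical: the hand-held is live while the drone is cued in preview.
print(tally_for("handheld", programme="handheld", preview="drone"))

roll = 10.0  # degrees of hypothetical wobble
for _ in range(90):  # one second at 90 FPS
    roll = level_roll(roll, dt=1 / 90)
```

Using `exp(-strength * dt)` rather than a fixed per-frame factor keeps the leveling equally gentle whether the client runs at 45 or 90 FPS.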

The idea of a hand-held camera system wasn't obvious to us until we saw the cameras used by the VRDancing community, notably during their Marshallthon fundraising event, where multiple hand-held close-ups were used throughout the coverage. We loved it.

The hand-held camera is moved around as a network-synced object, rather than relying on externally provided streams from the camera operators, which has been a common approach at other events. In our case, however, we needed to keep our stream user static in the world rather than physically attached to the camera object, as we had to make some extremely long shots without compromising the DJ and dancer movements.

The main reason we keep everything in-world, as we did with OMF 2021, is to keep the performance in perfect sync and maintain consistency between shots for the broadcast. One downside of this approach is that we can't leverage out-of-the-box systems such as VRCLens and instead have to build all of the equivalent functionality from the ground up, but it does mean that anyone can be a camera operator without any setup on their side.

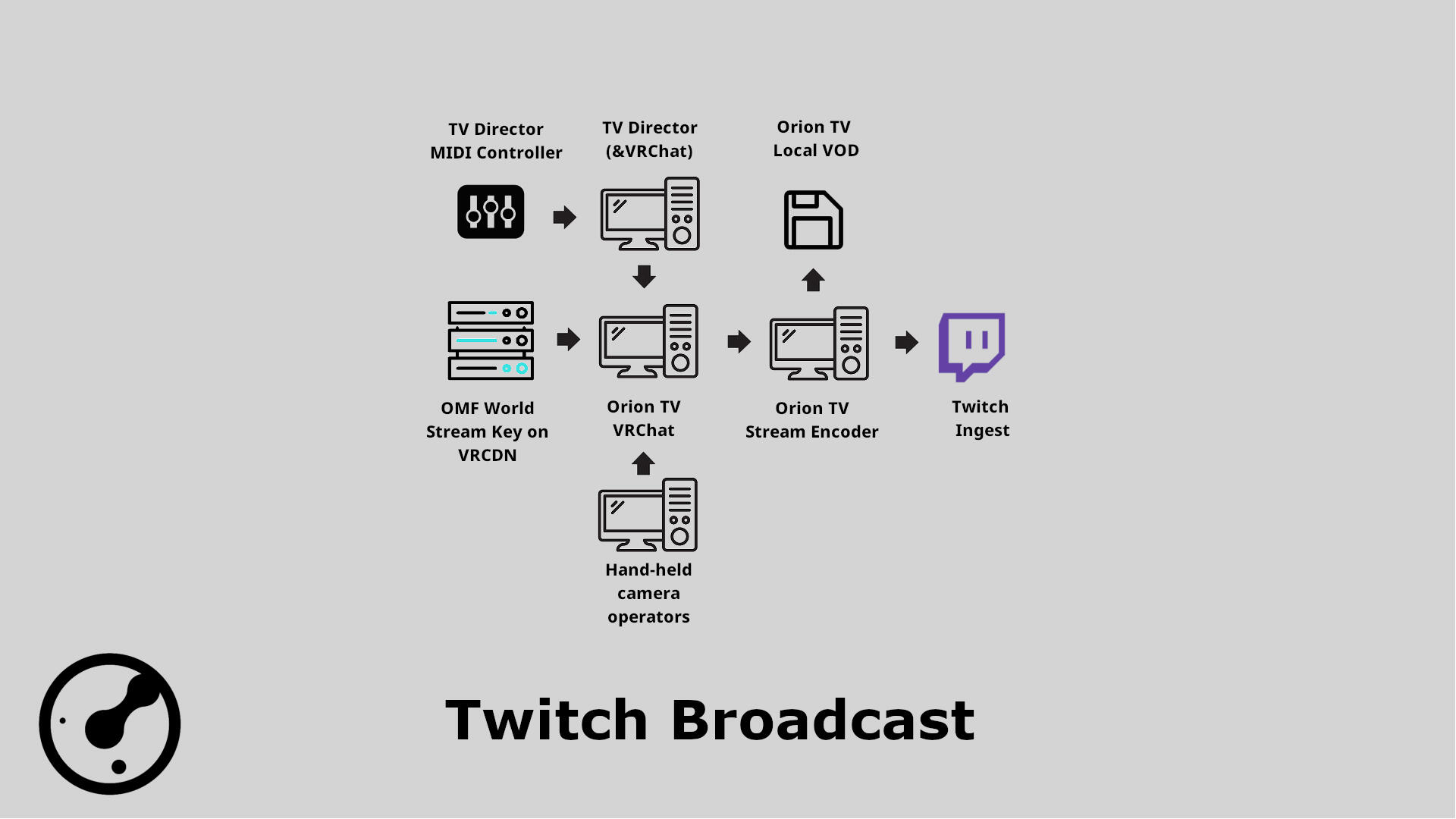

TWITCH BROADCAST

To show the event to everyone outside VRChat, we continued last year's approach of using a dedicated, high-specification gaming PC running VRChat in desktop mode. The Video Mixer's programme camera was sent to this desktop user, with a screen-space shader overwriting their view.

A Cam Link 4K capture card then fed the gaming PC's output into a separate OBS streaming computer, configured with multiple scenes containing Orion-specific overlays, stinger transitions, and custom video content. From there, we sent the broadcast to Twitch at 1080p60 and 8000 Kbps.

We carefully positioned the desktop user in-world to ensure maximum IK and physics bone resolution. To help maintain performance, we disabled as much unnecessary processing as possible, such as turning off player audio and using distance-based avatar hiding to avoid rendering users over at the world spawn.
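
Distance-based hiding amounts to a radius check around the desktop user. This sketch assumes a plain Euclidean cut-off and a hypothetical 100 m radius; the source does not describe the actual hiding mechanism or the distances used, and the player names and positions are made up.

```python
import math
from dataclasses import dataclass


@dataclass
class Player:
    name: str
    x: float
    y: float
    z: float


def visible_players(viewer: Player, players: list[Player], max_distance: float) -> list[str]:
    """Names of other players close enough to the viewer to be rendered."""
    here = (viewer.x, viewer.y, viewer.z)
    return [
        p.name for p in players
        if p is not viewer and math.dist(here, (p.x, p.y, p.z)) <= max_distance
    ]


stream_user = Player("stream_user", 0.0, 0.0, 0.0)
dancer = Player("dancer", 10.0, 0.0, 5.0)
at_spawn = Player("spawn_visitor", 200.0, 0.0, 0.0)

print(visible_players(stream_user, [stream_user, dancer, at_spawn], max_distance=100.0))
```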

It was originally agreed in the run-up to OMF 2021 that a desktop client would be the preferred solution, and it continues to be the best choice available for our production today. Combined with the latest PC gaming hardware, it allowed us to maintain the highest possible frame rates for the VOD recording and the Twitch output.

| Component | VRChat PC | Stream Encoder |
|---|---|---|
| CPU | AMD Ryzen 7 5800X3D | AMD Ryzen 5 5600X |
| RAM | 64GB | 32GB |
| GPU | NVIDIA GeForce RTX 3090 | Intel Arc A380 |

Benchmark testing before and after upgrading the VRChat PC to an AMD Ryzen 7 5800X3D revealed a staggering increase in the game's performance. VRChat really does like the 96MB of L3 cache that AMD's 3D V-Cache provides.

We then duplicated the entire US-based two-PC broadcasting setup in the UK, with the same configuration, for redundancy, which thankfully we did not have to use.

ART & VIDEO

A number of team members contributed content and pitched ideas to AcChosen for the world creation leading up to the event. In particular, Mearmada and Vianvolaeus were instrumental in creating many of the posters, advertisements, and product placements around the city, and put out an absolute ton of content to make the place livelier and more vibrant.

For OMF 2022, Mearmada came up with a fresh design that we used across all of the branding, stream overlays, and posters for the event, and worked closely with our social media manager, Leafynn, to create some fantastic and informative imagery.

Vianvolaeus created OMF 2022-branded bracelets that were free to download from the event Discord, along with handy instructional videos to guide users on adding them to their avatars.

As an added bonus, AcChosen applied a custom shader to the bracelet that reacted to specific DMX channels used during the performance, which allowed the LDs to create more of a dynamic with the crowd!

We also wanted to expand on additional content for the stream this year but, unfortunately, ran out of time to focus on it. We wanted to explore themes using the kind of humour found in the outrageous product advertisements of Cyberpunk 2077 or RoboCop.

We reached out to Thiopental to see if they were interested, and despite the short timeframe remaining, they were able to put out a great clip.

This short advert ran only a couple of times during the event, between DJ sets, so it may have been easy to miss. We definitely need to explore this more in the future!

FUTURE PLANS

After the event finished, we quickly started work on a post-mortem of how we could have approached things better.

On the streaming side specifically, there are a number of avenues we want to investigate over the coming months. We're looking at GPU-based cloud instances for stream compositing and aggregation, more reliable streaming protocols to ensure delivery of content between the artists and the crew, and even the possibility of AV1 encoding to Twitch (should Twitch start supporting the codec), allowing for a 1440p broadcast.

We would also like to work with more original content creators on more artwork and high-quality memes.

Watch this space, and hopefully see you all soon!

THE ATTENDEES & VIEWERS

To everyone who supported us leading up to and during the event, even if it was just to visit briefly, dance for hours, enjoy their favourite DJ, or check out the stream...

Thank you for making it all worthwhile.